Robin O'Connell

Fanalyze Winter Events

Fanalyze Winter Events (formerly Project Javelin) was my capstone project at CSU Sacramento. I led a team that included seven other students to develop a cross-platform mobile application for a real-world client, Fanalyze. The app is designed to help users navigate and access the vast amounts of data generated by the Olympic Games. We accomplished this by pulling together multiple data sources, such as Data Sports Group's Olympics API and Twitter feeds, and tailoring the experience to each user's interests.

See below for a list of features and a summary of the development process and architecture. The video below demonstrates many of the app's features; I recommend watching it at 2x speed since it is paced to allow for commentary.

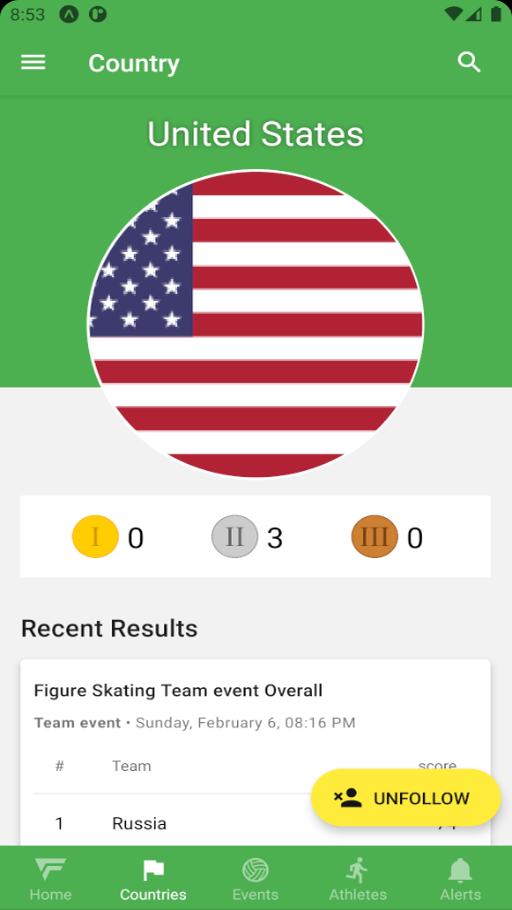

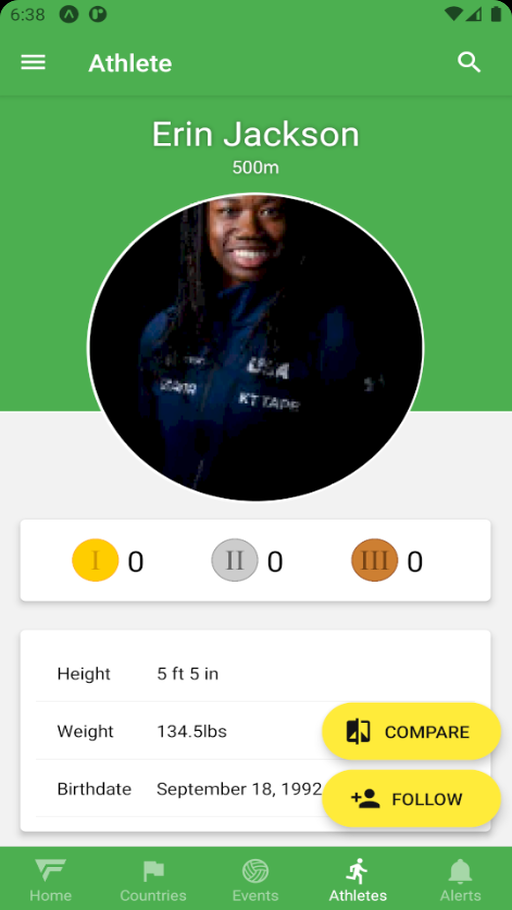

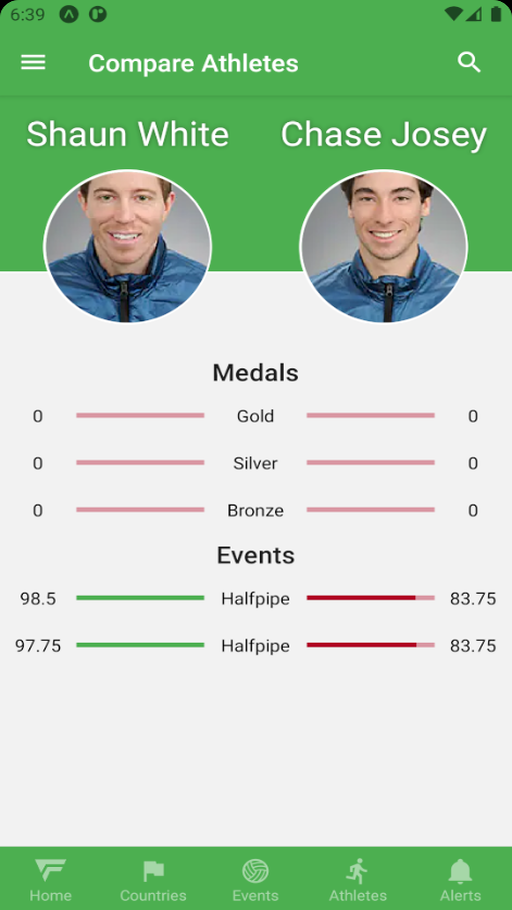

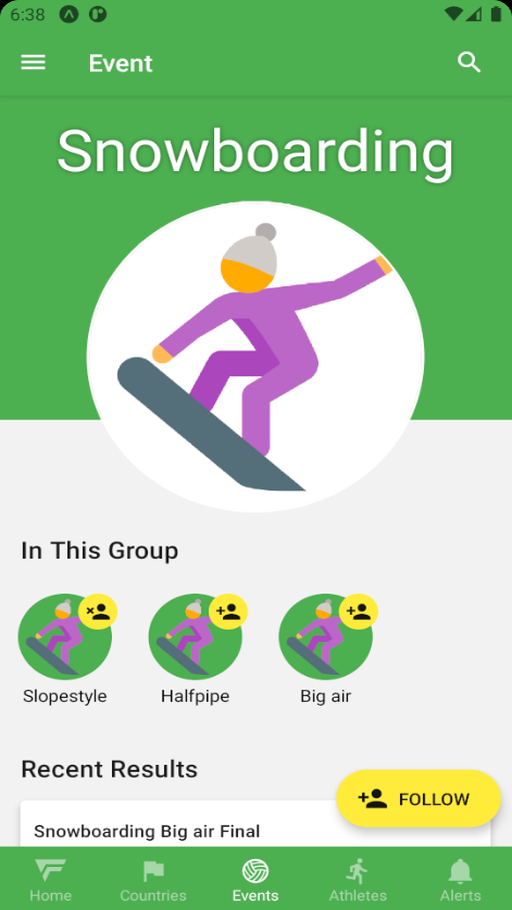

Screenshots

Tools & Technologies

Frontend

- TypeScript

- React Native/Expo

- React Navigation

- React Native Paper

- Axios

- React Query

Backend

- TypeScript

- Node/Express

- MongoDB/Mongoose

- Passport

- Data Sports Group API

- Heroku/AWS

Features

- Social login with Facebook, Google, and Apple

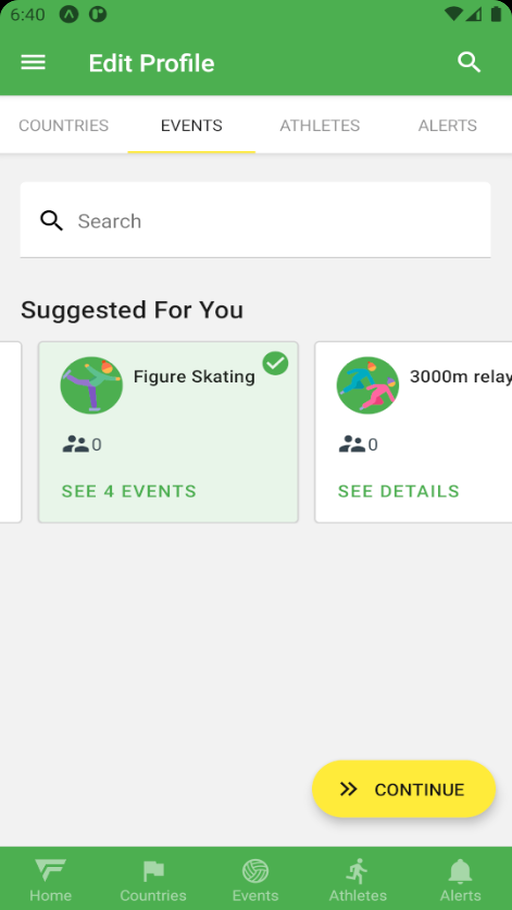

- Build a personal profile to receive timely and relevant results and notifications

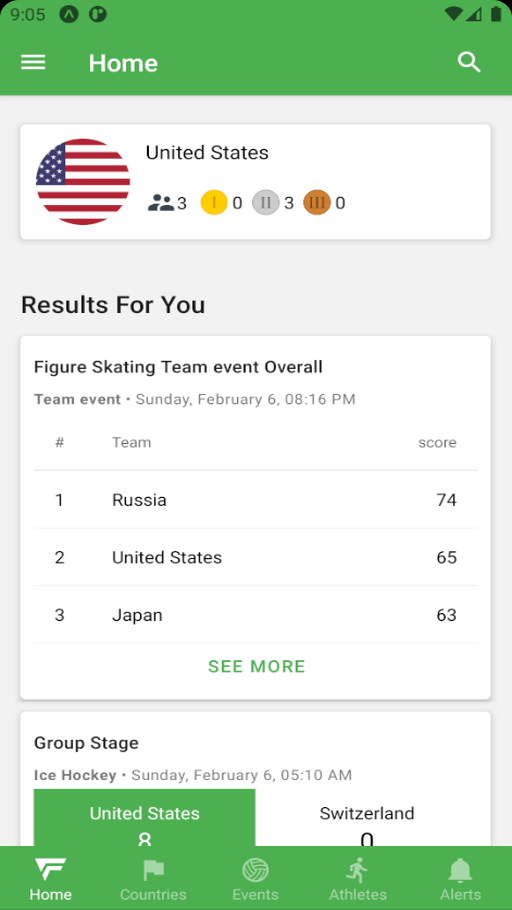

- Personalized results feed

- Search for countries, events, and athletes (with search suggestions and fuzzy search)

- View the dates and times of upcoming events

- View recent social media posts via Twitter integration

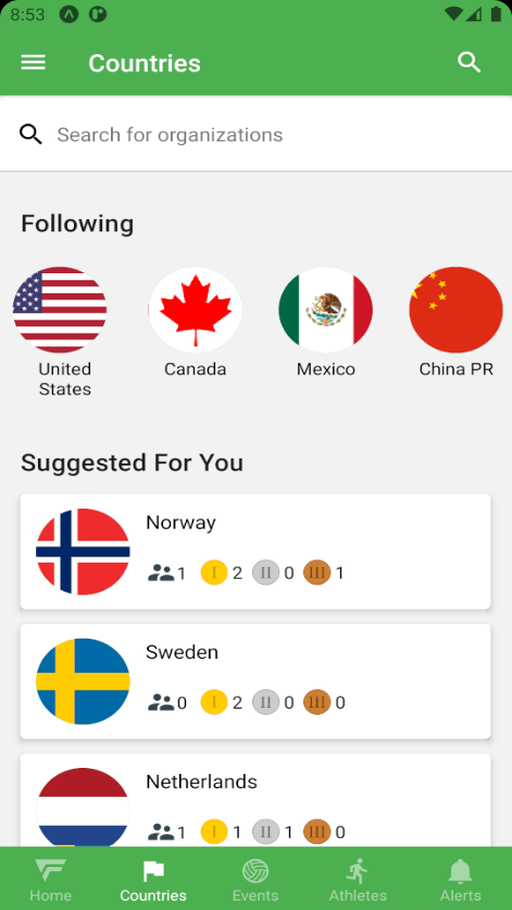

- View current medal counts for each country/athlete

- View athlete stats

- Compare athletes side-by-side

- Track the location of the Olympic torch

Process

Fanalyze Winter Events was designed and built over the course of two semesters using a (prescribed) hybrid waterfall and agile methodology. Early in the 2021 spring semester, the team self-assembled and began looking for a client. I was soon elected team leader for the initiative I showed in reaching out to potential clients and organizing the team—a role I took very seriously and would continue to grow into. Of the several clients who expressed interest in working with us, we chose Juan Juan and his company, Fanalyze, who was our earliest respondent.

During the spring, we met regularly with Juan, gathered and documented requirements, researched and evaluated technologies, and refined a series of prototypes. We started exploring the design space by creating static wireframes with draw.io. Eventually, we produced a detailed, interactive prototype in Adobe XD.

That summer, we built the Torch Tracker portion of the app, which enables users to follow along with the Olympic Torch Relay. This allowed the team to get their feet wet with the technologies we had selected, many of which were new to most, if not all, of us. At the same time, I produced a study guide and set of short tutorials to help my teammates get up to speed. (As it happened, the Chinese Olympic Committee was quite secretive about the relay route to avoid attracting crowds during COVID, so this feature was ultimately cut.)

With the arrival of fall, the pressure was on to finish the app. We adopted a more agile-like process, using Flying Donut (similar to Jira) to plan and execute a series of 2-week sprints. As the team's leader and most experienced programmer, I found this an intense time. In addition to implementing many features and being largely responsible for the app's architecture, I kept us on schedule, distributed the workload, facilitated collaboration, helped my teammates learn the tech stack and troubleshoot technical issues, communicated with our client and API providers, scheduled and conducted meetings, put out fires, and was generally involved in every aspect of the app's creation. (Not to mention I was taking other classes, too!)

While not every planned feature made it into the final product due to the tight schedule and other obstacles, we delivered a feature-packed app that was well-received by our client and our colleagues when we presented our work at the end of the year.

Architecture

Broadly speaking, Fanalyze Winter Events is a React Native app powered by a custom REST API we built with Express. Most of the data was provided by Data Sports Group.

Backend

One of our goals was for the system to be reusable for future Olympics. To this end, I decided to decouple from the DSG API as much as possible. Early on, we developed our own model of the data as it would be understood by the frontend. Then, we created an abstraction layer that translated the data from DSG to our model. (Huge thanks to Jeremy Persing who implemented this layer nearly single-handedly.) This had a few benefits. First, it allowed us to optimize the data for ease of use and smooth over inconsistencies or deficiencies in DSG's API. It also meant that if the API disappeared or changed, or if a competitor's service became a better option in the future, most of the system would be insulated from the change.
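A minimal sketch of what one translator in such a layer might look like. The provider-side field names (`people_id`, `first_name`, `last_name`, `area_code`) and the internal `Athlete` shape are hypothetical, chosen only for illustration; they are not taken from DSG's actual schema or from the project's code.

```typescript
// Hypothetical shape of a raw provider record (field names are assumptions).
interface ProviderAthlete {
  people_id: string;
  first_name: string;
  last_name: string;
  area_code?: string;
}

// Hypothetical internal model, shaped for what the frontend needs.
interface Athlete {
  _type: "athlete";
  _id: string;
  name: string;
  countryId: string | null;
}

// Translate one provider record into the internal model.
// Only this function needs to change if the provider's format changes.
function toAthlete(raw: ProviderAthlete): Athlete {
  return {
    _type: "athlete",
    _id: raw.people_id,
    name: `${raw.first_name} ${raw.last_name}`.trim(),
    countryId: raw.area_code ?? null, // smooth over a missing field
  };
}
```

Because the rest of the system only ever sees `Athlete`, swapping providers would mean writing a new translator rather than touching the API or the app.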

We stored the translated data in a Mongo database (hosted by Atlas) and updated it regularly with a Heroku Scheduler task. Using Express and Mongoose (an ODM for Mongo—the equivalent to an ORM for relational databases), we built a REST API for accessing the data, creating and modifying user profiles, and so on. We also implemented browser-based social authentication with Passport and JWTs. During development, the backend server was hosted on Heroku, but we later migrated to Amazon EC2.

API

I modeled the API after the JSON:API specification and largely followed RESTful principles. Each endpoint identifies either a single resource (such as an athlete or a country) or a collection of resources (a set of resources of the same type, such as all of the countries). Clients retrieve or change resources and collections by making HTTP requests with an appropriate verb—e.g. GET to retrieve or PATCH to update. Most of the resources are read-only; an exception is the user's profile.

A resource has at least these three properties, called a resource identifier:

- _type: the type of the resource, such as athlete or country

- _id: an identifier for the resource that is unique among resources with the same type

- _href: the URL the resource can be retrieved from

A collection has only _type and _href, which together are called a collection identifier. Some resources also have a _links object that provides the URLs of related resources and collections.
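In TypeScript terms, the identifiers described above could be modeled roughly as follows. This is a sketch: the interface names and the assumption that `_links` maps a relationship name to a URL string are mine, not confirmed details of the project.

```typescript
// A collection is identified by its type and URL.
interface CollectionIdentifier {
  _type: string;
  _href: string;
}

// A resource identifier adds an ID that is unique within its type.
interface ResourceIdentifier extends CollectionIdentifier {
  _id: string;
}

// A full resource carries its identifier plus its own fields.
interface Resource extends ResourceIdentifier {
  _links?: Record<string, string>; // assumed shape: relationship name -> URL
  [field: string]: unknown;
}

// Runtime check that a value carries all three identifier properties.
function isResourceIdentifier(value: unknown): value is ResourceIdentifier {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  return (
    typeof candidate._type === "string" &&
    typeof candidate._id === "string" &&
    typeof candidate._href === "string"
  );
}
```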

The server responds to every request with JSON following this format:

    {
      data?: Resource | Resource[],
      errors?: {
        code: string,
        title: string,
        detail: string
      }[],
      included?: Resource[]
    }

Every response has either the data field or the errors field. (The two are mutually exclusive.) The included field is explained next.

Many resources refer to other resources. For example, an athlete has a reference to the country they compete for. References are encoded by resource identifiers. If the client wants to retrieve information about a referenced resource, there are two options: make an additional request to the provided URL or ask for the resource to be included in the initial response. The client can append the query param includes[] to any request to specify which referenced resources should be included. This helps reduce the number of requests that need to be made.
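As a rough illustration of the client side of this, the sketch below appends `includes[]` parameters to a resource URL and then looks up a referenced resource in the `included` array of a response. The helper names, the example URL, and the assumption that `includes[]` values are relationship names such as `country` are hypothetical.

```typescript
interface ResourceIdentifier {
  _type: string;
  _id: string;
  _href: string;
}

interface ApiResponse {
  data?: unknown;
  included?: ResourceIdentifier[];
}

// Append one includes[] parameter per requested relationship.
function withIncludes(href: string, includes: string[]): string {
  if (includes.length === 0) return href;
  const key = encodeURIComponent("includes[]");
  const params = includes.map((name) => `${key}=${encodeURIComponent(name)}`);
  const separator = href.includes("?") ? "&" : "?";
  return href + separator + params.join("&");
}

// Resolve a reference against the resources bundled with the response.
function findIncluded(
  response: ApiResponse,
  ref: { _type: string; _id: string }
): ResourceIdentifier | undefined {
  return response.included?.find(
    (r) => r._type === ref._type && r._id === ref._id
  );
}
```

If the referenced resource is not found in `included`, the client can still fall back to a separate request to the reference's `_href`.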

Frontend

We made the app itself using React Native and Expo. Expo is a set of cross-platform APIs and tools (such as a cloud-based build service) designed to support and expedite the creation of RN apps. We handled navigation and internal linking with React Navigation. For the basic UI, we used React Native Paper, a set of components that conform to Google's Material Design system. By taking advantage of RN Paper's theming capability and creating some generic container components, like <Section>, we were able to maintain a consistent look and feel on every screen.

For data fetching and state management, we used Axios and React Query (now TanStack Query). Axios is an HTTP client. It fulfills the role of the Fetch API available in browsers with some added bells and whistles. React Query has many uses, but primarily, it simplifies working with asynchronous state by fetching, caching, and refetching data as needed and by making it a breeze to handle loading and error states. It also enables state to be shared across the whole app—so, for instance, a component won't need to fetch data for a particular athlete if a component on the previous screen fetched it 30 seconds ago.

At startup, the frontend knows only the root URL of the API. It makes a GET request to that URL to discover the endpoints for authentication, profile management, athlete data, and so on, allowing maximum flexibility on the backend. (The frontend never constructs URLs itself.) For the most part, components don't use these endpoints directly. Instead, they access the data they need via custom hooks such as useAthlete, useUser, or the generic useResource and useCollection. Uniform query keys ensure that data is intelligently cached and shared between components.
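The two ideas in this paragraph, endpoint discovery and uniform query keys, might be sketched as below. The shape of the root document (a `_links` map from endpoint name to URL) and the key layout (`["resource", type, id]`) are assumptions made for the example; the real hooks would wrap helpers like these around React Query.

```typescript
// Assumed shape of the document returned by GET <root URL>.
interface ApiRoot {
  _links: Record<string, string>;
}

// Look up an advertised endpoint instead of constructing a URL by hand.
function endpointFor(root: ApiRoot, name: string): string {
  const href = root._links[name];
  if (href === undefined) {
    throw new Error(`API root does not advertise "${name}"`);
  }
  return href;
}

// Uniform query keys: every component asking for the same entity
// produces the same key, so the cached data is shared.
function resourceKey(type: string, id: string): readonly string[] {
  return ["resource", type, id];
}

function collectionKey(type: string): readonly string[] {
  return ["collection", type];
}
```

A hook such as useAthlete could then pass `resourceKey("athlete", id)` as its query key, so two screens showing the same athlete would hit the same cache entry.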

Cache Mechanisms

I designed and implemented two interesting mechanisms that complement React Query's functionality. The first concerns the caching of collections and includes (described in the API section above). Consider a particular heat of the men's 100m dash that took place at 2:00 pm on Tuesday. When the app fetches the results for that heat, it also requests the data for each athlete in the heat as an include. Suppose the user navigates to the details screen for one of those athletes. Normally, RQ wouldn't recognize that the data queried on the athlete screen is the same data that was included in the query made by the results screen because they have distinct query keys.

I addressed this limitation by iterating over each included entity and manually updating the corresponding cache entry. Likewise, when a collection is queried, the cache entry for each entity in the collection is updated. As a result, if the data for a particular entity (athlete, etc.) is fetched by any query, the data is "magically" reused/refreshed everywhere else.
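A simplified sketch of this idea follows. The `CacheLike` interface stands in for React Query's query client (it mirrors the shape of `queryClient.setQueryData`), and the key layout and function names are illustrative assumptions rather than the project's actual code.

```typescript
interface Resource {
  _type: string;
  _id: string;
  _href: string;
  [field: string]: unknown;
}

interface ApiResponse {
  data?: Resource | Resource[];
  included?: Resource[];
}

// Minimal stand-in for the part of the query client used here.
interface CacheLike {
  setQueryData(key: readonly unknown[], data: unknown): unknown;
}

// Assumed key layout for a single entity.
function resourceKey(resource: Resource): readonly unknown[] {
  return ["resource", resource._type, resource._id];
}

// Write every collection member and every included entity into its own
// cache entry so that later single-entity queries find the data.
function seedCache(cache: CacheLike, response: ApiResponse): number {
  const members = Array.isArray(response.data) ? response.data : [];
  const entities = [...members, ...(response.included ?? [])];
  for (const entity of entities) {
    cache.setQueryData(resourceKey(entity), entity);
  }
  return entities.length; // number of entries written
}
```

Calling something like `seedCache` after each successful fetch is what would make the athlete screen find data that the results screen had already loaded.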

The second mechanism is something I dubbed cache references. By and large, the frontend treats the API responses as plain old data, so it doesn't perform any object mapping. However, we did want easy access to related entities, such as being able to get detailed information about the country associated with an athlete. For this purpose, every API response is postprocessed to replace references to other resources (essentially IDs) with CacheReference objects. A CacheReference is a symbolic link to the other entity which can be accessed with the get method like so: athlete.country.get()?.name. This method retrieves the most recent data for that entity from the cache (as long as it's present, which it will be if it was requested by an include or any other query).
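The sketch below shows one way a cache reference could work. Only the `CacheReference` name and its `get` method come from the description above; the `Lookup` callback (standing in for a read from the React Query cache), the `linkReferences` helper, and the rule used to detect references are assumptions for illustration.

```typescript
interface Resource {
  _type: string;
  _id: string;
  _href: string;
  [field: string]: unknown;
}

// Stand-in for reading the latest cached data for an entity.
type Lookup = (type: string, id: string) => Resource | undefined;

class CacheReference {
  readonly type: string;
  readonly id: string;
  private readonly lookup: Lookup;

  constructor(type: string, id: string, lookup: Lookup) {
    this.type = type;
    this.id = id;
    this.lookup = lookup;
  }

  // Returns the most recent cached data, or undefined if not cached.
  get(): Resource | undefined {
    return this.lookup(this.type, this.id);
  }
}

function isIdentifier(value: unknown): value is { _type: string; _id: string } {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return typeof v._type === "string" && typeof v._id === "string";
}

// Replace each field that holds a resource identifier with a CacheReference.
function linkReferences(resource: Resource, lookup: Lookup): Record<string, unknown> {
  const linked: Record<string, unknown> = {};
  for (const [key, value] of Object.entries(resource)) {
    linked[key] = isIdentifier(value)
      ? new CacheReference(value._type, value._id, lookup)
      : value;
  }
  return linked;
}
```

Because `get` reads from the cache at call time rather than holding a copy, a reference always reflects whatever the most recent query stored for that entity.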